2025’s Fiercest Women in Life Sciences

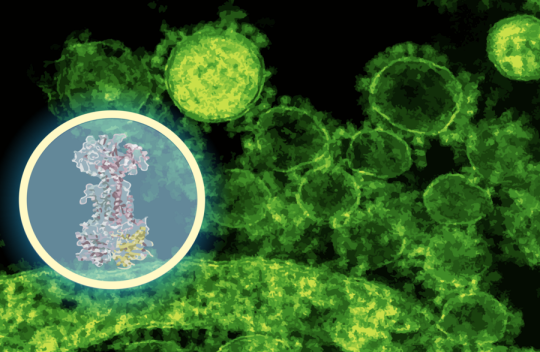

The life sciences mission of Najat Khan, Ph.D., was forged in hospital hallways. Her parents, a trauma surgeon and a gynecologist-turned-radiologist, “didn't believe in babysitters,” she joked in an interview. As a result, she spent much of her childhood in hospitals, which showed Khan the life-changing impact of innovative medicines and set her on the path to the C-suites of Johnson & Johnson and Recursion Pharmaceuticals.