Author: Alex Ouyang

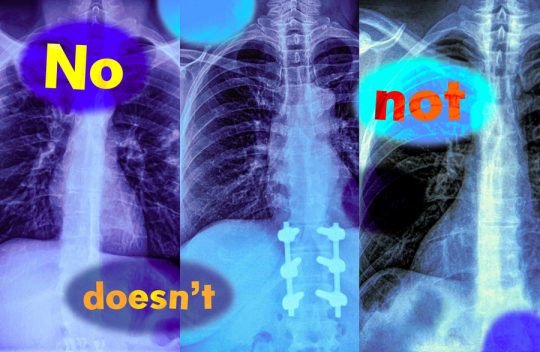

AI doesn’t know ‘no’ – and that’s a huge problem for medical bots

Toddlers may swiftly master the meaning of the word “no”, but many artificial intelligence models struggle to do so. They show a high failure rate when it comes to understanding commands that contain negation words such as “no” and “not”. That could mean medical AI models failing to realise that there is a big difference between an X-ray image labelled as showing “signs of pneumonia” and one labelled as showing “no signs of pneumonia”, with potentially catastrophic consequences if physicians rely on AI assistance to classify images when making diagnoses or prioritising treatment for certain patients.

It might seem surprising that today’s sophisticated AI models would struggle with something so fundamental. But, says Kumail Alhamoud at the Massachusetts Institute of Technology, “they’re all bad [at it] in some sense”.

Study shows vision-language models can’t handle queries with negation words

Imagine a radiologist examining a chest X-ray from a new patient. She notices the patient has swelling in the tissue but does not have an enlarged heart. Looking to speed up diagnosis, she might use a vision-language machine-learning model to search for reports from similar patients. But if the model mistakenly retrieves reports of patients with both conditions, the most likely diagnosis could be quite different: if a patient has tissue swelling and an enlarged heart, the condition is very likely to be cardiac related, but with no enlarged heart there could be several underlying causes.

In a new study, MIT researchers have found that vision-language models are extremely likely to make such a mistake in real-world situations because they don’t understand negation: words like “no” and “doesn’t” that specify what is false or absent.

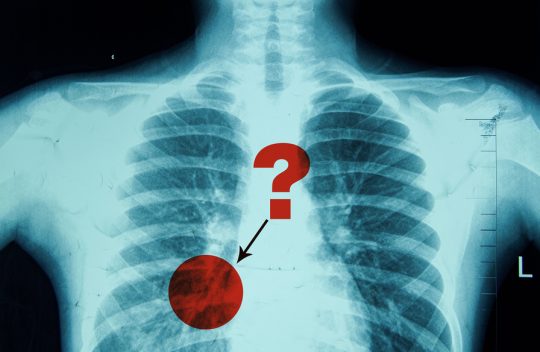

New method assesses and improves the reliability of radiologists’ diagnostic reports

Due to the inherent ambiguity in medical images like X-rays, radiologists often use words like “may” or “likely” when describing the presence of a certain pathology, such as pneumonia. But do the words radiologists use to express their confidence level accurately reflect how often a particular pathology occurs in patients? A new study shows that when radiologists express confidence about a certain pathology using a phrase like “very likely,” they tend to be overconfident, and conversely, they tend to be underconfident when they express less confidence using a word like “possibly.” Using clinical data, a multidisciplinary team of MIT researchers, in collaboration with researchers and clinicians at hospitals affiliated with Harvard Medical School, created a framework to quantify how reliable radiologists are when they express certainty using natural language terms.

MIT faculty, alumni named 2025 Sloan Research Fellows

Marzyeh Ghassemi PhD ’17 is an associate professor within EECS and the Institute for Medical Engineering and Science (IMES). Ghassemi earned two bachelor’s degrees in computer science and electrical engineering from New Mexico State University as a Goldwater Scholar; her MS in biomedical engineering from Oxford University as a Marshall Scholar; and her PhD in computer science from MIT. Following stints as a visiting researcher with Alphabet’s Verily and an assistant professor at University of Toronto, Ghassemi joined EECS and IMES as an assistant professor in July 2021. (IMES is the home of the Harvard-MIT Program in Health Sciences and Technology.) She is affiliated with the Laboratory for Information and Decision Systems (LIDS), the MIT-IBM Watson AI Lab, the Abdul Latif Jameel Clinic for Machine Learning in Health, the Institute for Data, Systems, and Society (IDSS), and CSAIL. Ghassemi’s research in the Healthy ML Group creates a rigorous quantitative framework in which to design, develop, and deploy machine learning models in a way that is robust and useful, focusing on health settings. Her contributions range from socially aware model construction, to improving subgroup- and shift-robust learning methods, to identifying important insights in model deployment scenarios that have implications for policy, health practice, and equity. Among other honors, Ghassemi has been named one of MIT Technology Review’s 35 Innovators Under 35 and an AI2050 Fellow, and she has received the 2018 Seth J. Teller Award, the 2023 MIT Prize for Open Data, a 2024 NSF CAREER Award, and the Google Research Scholar Award. She founded the nonprofit Association for Health, Inference and Learning (AHLI), and her work has been featured in popular press such as Forbes, Fortune, MIT News, and The Huffington Post.