Tag: clinical AI

MIT and Mass General Hospital researchers find disparities in organ acceptance

Most computational research in organ allocation is focused on the initial stages, when waitlisted patients are being prioritized for organ transplants. In a new paper presented at the ACM Conference on Fairness, Accountability, and Transparency (FAccT) in Athens, Greece, researchers from MIT and Massachusetts General Hospital focused on the final, less-studied stage: organ offer acceptance, when an offer is made and the physician at the transplant center decides on behalf of the patient whether to accept or reject the offered organ.

Can AI Predict Breast Cancer? How a Scientist’s Personal Challenge Launched a Professional Mission

When Regina Barzilay was diagnosed with breast cancer in 2014, it upended her life and shifted the direction of her research. She was already an accomplished computer scientist specializing in natural language processing, but her experience as a patient revealed possible new applications for machine learning, as well as a stark disconnect between technology’s promise and its implementation in health care. “It was upsetting to see that all these great technologies are not translated into patient care,” she recalls. “I wanted to change it.” After going through her own treatment, Barzilay’s work took on an urgent new focus: could the very technologies she used in her research predict who might be at risk for breast cancer?

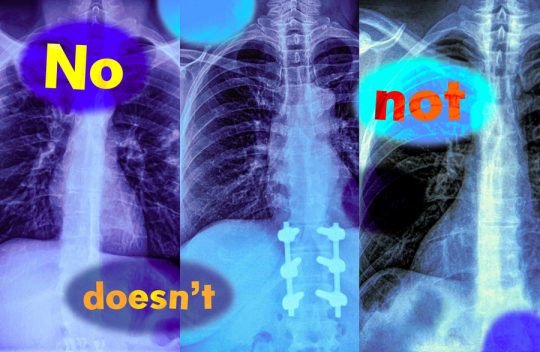

AI doesn’t know ‘no’ – and that’s a huge problem for medical bots

Toddlers may swiftly master the meaning of the word “no”, but many artificial intelligence models struggle to do so. They fail at a high rate when asked to understand commands that contain negation words such as “no” and “not”. That could mean medical AI models failing to realise that there is a big difference between an X-ray image labelled as showing “signs of pneumonia” and one labelled as showing “no signs of pneumonia”, with potentially catastrophic consequences if physicians rely on AI assistance to classify images when making diagnoses or prioritising treatment for certain patients.

It might seem surprising that today’s sophisticated AI models would struggle with something so fundamental. But, says Kumail Alhamoud at the Massachusetts Institute of Technology, “they’re all bad [at it] in some sense”.

Study shows vision-language models can’t handle queries with negation words

Imagine a radiologist examining a chest X-ray from a new patient. She notices the patient has swelling in the tissue but does not have an enlarged heart. Looking to speed up diagnosis, she might use a vision-language machine-learning model to search for reports from similar patients. But if the model mistakenly identifies reports with both conditions, the most likely diagnosis could be quite different: if a patient has tissue swelling and an enlarged heart, the condition is very likely to be cardiac related, but with no enlarged heart there could be several underlying causes.

In a new study, MIT researchers have found that vision-language models are extremely likely to make such a mistake in real-world situations because they don’t understand negation, meaning words like “no” and “doesn’t” that specify what is false or absent.
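To make the retrieval failure in the radiologist example concrete, here is a toy sketch. It is not the models or method from the MIT study: it assumes a crude bag-of-words text vector in place of a learned vision-language encoder, ranks hypothetical report strings by cosine similarity, and the function name `rank_reports` is purely illustrative. Because “no” is treated as just one more token, a report describing the opposite finding scores almost as high as the matching one.

```python
import math
from collections import Counter

def bag_of_words(text: str) -> Counter:
    # Crude stand-in for a learned text encoder: lowercase, split on whitespace, count tokens.
    return Counter(text.lower().split())

def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared tokens divided by the product of the vector norms.
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def rank_reports(query: str, reports: list[str]) -> list[tuple[float, str]]:
    # Hypothetical retriever: score every report against the query, highest similarity first.
    q = bag_of_words(query)
    scored = [(cosine_similarity(q, bag_of_words(r)), r) for r in reports]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

# Hypothetical query and reports mirroring the radiologist scenario.
QUERY = "tissue swelling and no enlarged heart"
REPORTS = [
    "clear lungs and normal heart size",
    "tissue swelling and enlarged heart",
    "tissue swelling and no enlarged heart",
]

if __name__ == "__main__":
    for score, report in rank_reports(QUERY, REPORTS):
        print(f"{score:.3f}  {report}")
```

Run as a script, this prints scores of 1.000, 0.913 and 0.333: the report with an enlarged heart, the opposite of what the radiologist is looking for, lands right behind the exact match because five of its six query words overlap. Real vision-language models are far more sophisticated than word counts, but the study’s finding is that they can behave similarly when negation carries the key information.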